Only 1.9% of People Are Able to Differentiate Between AI-Generated and Human Faces: Deepfake Study by HumanOrAI.IO

HumanOrAI.IO recently launched a unique social game, "Human or AI?", where participants are challenged to differentiate between AI-generated images and real human images. This game is designed as a social study of Deepfake image identification by general users.

Participants are shown five images and need to guess which ones are real and which are AI-generated. It's a fast, fun challenge that takes just 30 seconds to complete.

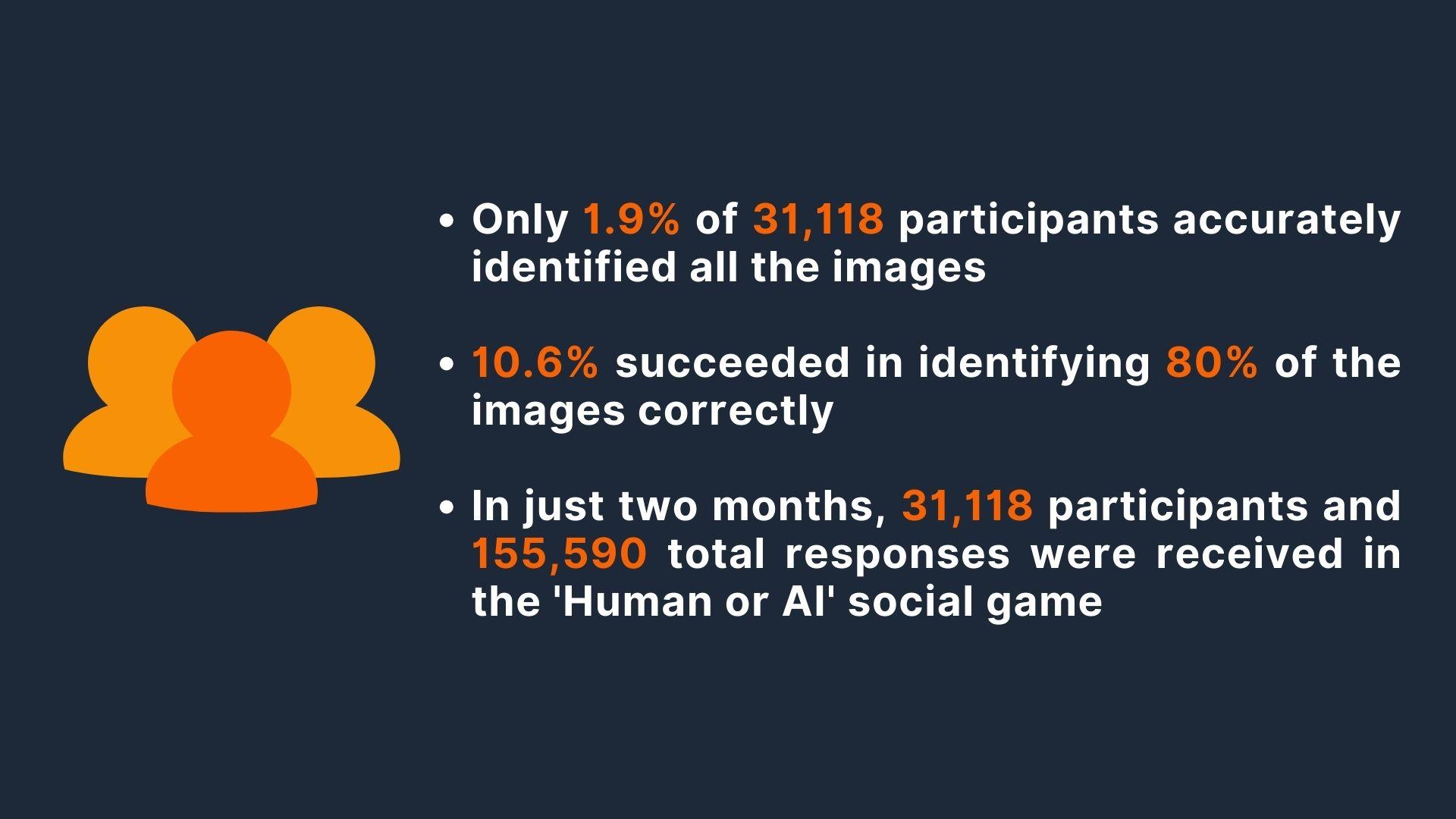

In just two months, we've observed fascinating results. Among the 31,118 participants, only 1.9% accurately identified all five images.

Key Statistics

- Only 1.9% of 31,118 participants accurately identified all five images.

- 10.6% succeeded in identifying at least four out of five images correctly.

- In just two months, 31,118 participants and 155,590 total responses were received in the 'Human or AI' social game.

This shows that AI technology has already become so advanced at creating realistic images and videos that it is simply not possible for a general audience to differentiate between deepfake images and real images.

Deepfake images and videos have already proliferated across social media, and the problem is only going to become worse. To contain it, we need advanced AI detection tools along with laws that require AI outputs to be watermarked.

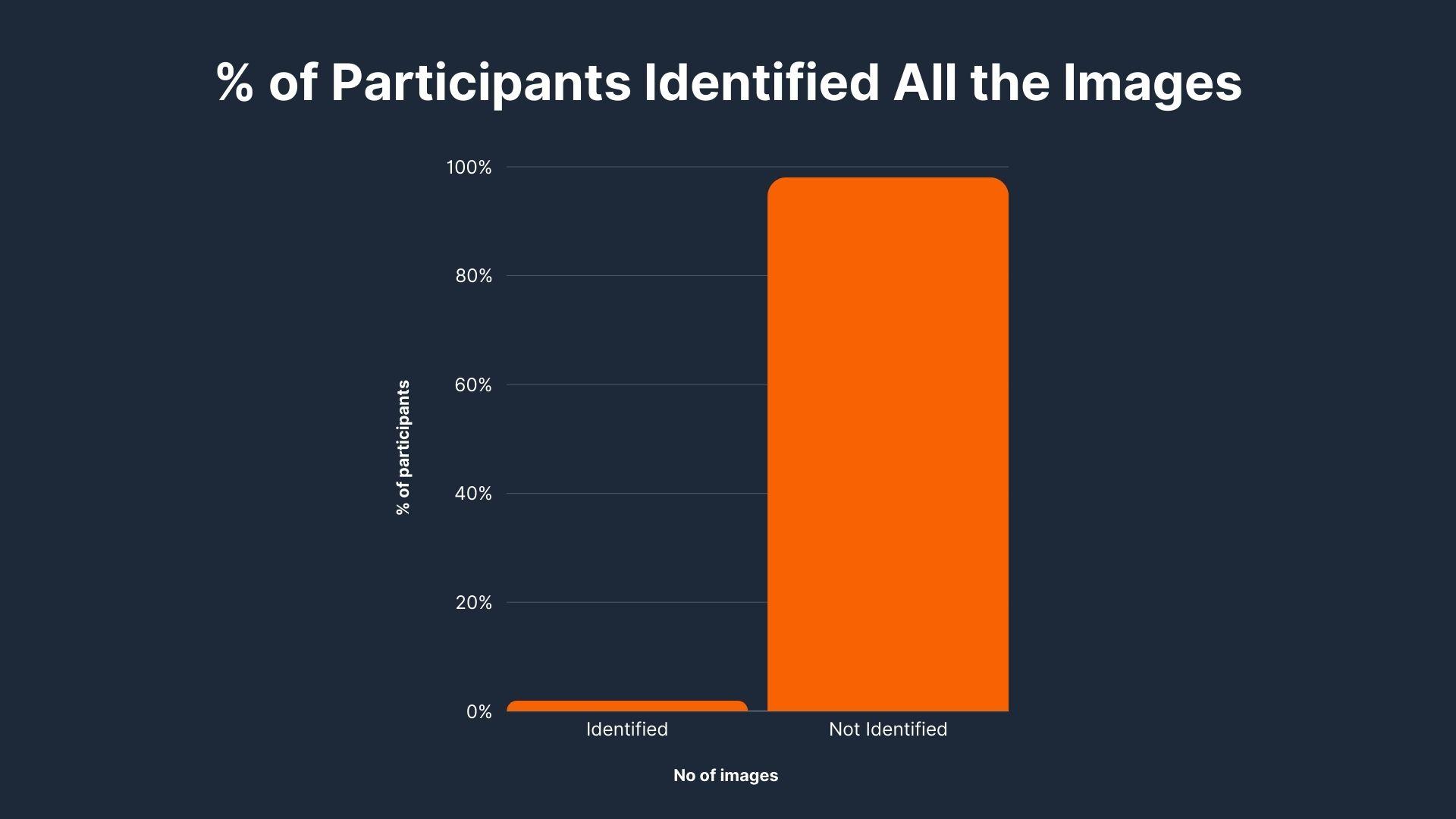

Only 1.9% of 31,118 Participants Accurately Identified All the Images

| Total Participants | Identified All Five Images | Did Not Identify All Five Images |
| --- | --- | --- |
| 31,118 | 607 | 30,511 |

Only 607 out of 31,118 participants guessed all five images correctly. This shows how challenging it is to tell real and AI-generated images apart: only a small group of participants managed the task.

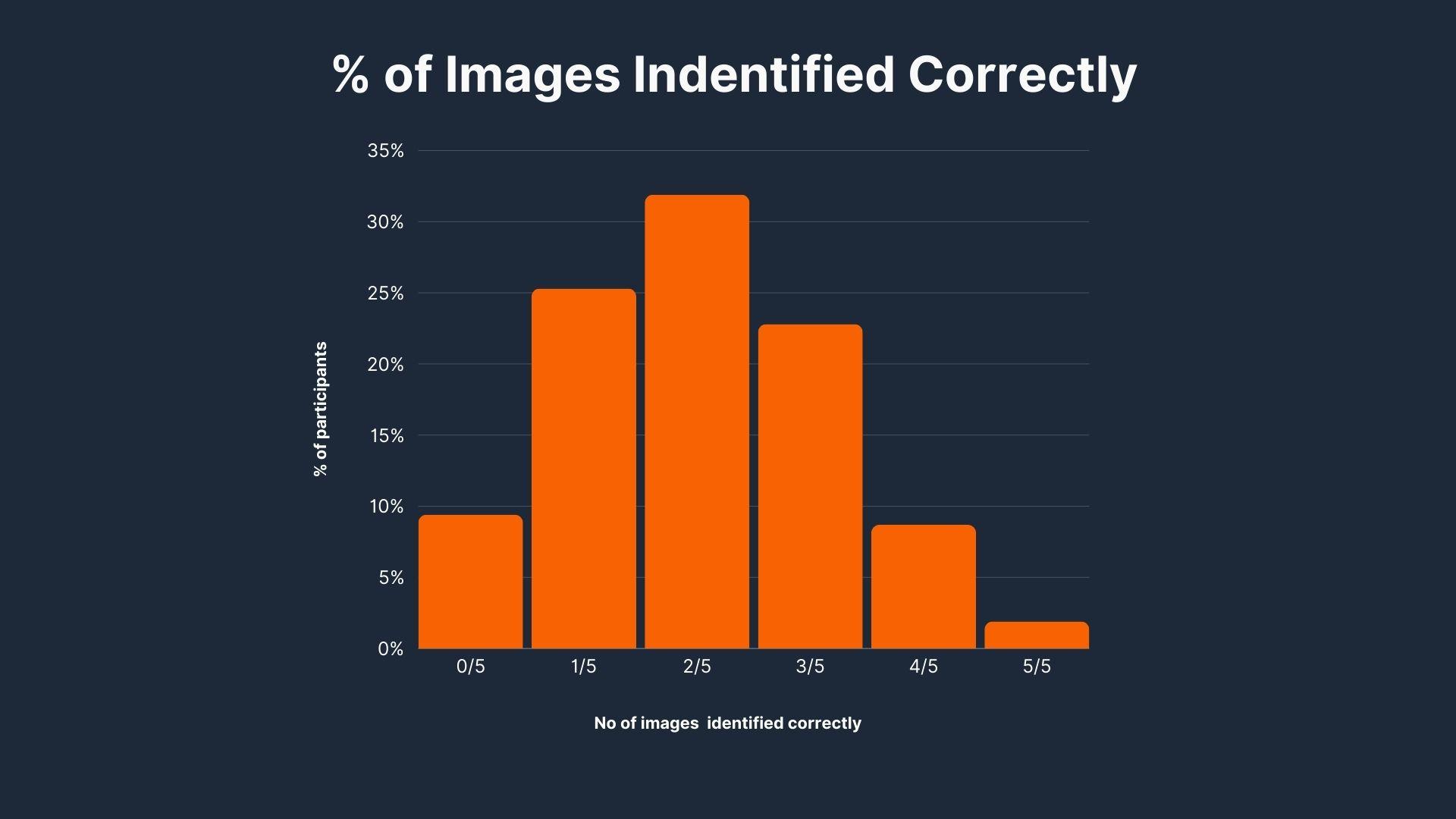

10.6% Succeeded in Identifying Either Four or Five Images Correctly

Total Participants: 31,118 (updated data)

| Number of Images Identified Correctly | Participants Count | Percentage |
| --- | --- | --- |
| 0 out of 5 | 2919 | 9.4% |
| 1 out of 5 | 7897 | 25.3% |
| 2 out of 5 | 9952 | 31.9% |
| 3 out of 5 | 7096 | 22.8% |
| 4 out of 5 | 2717 | 8.7% |
| 5 out of 5 | 607 | 1.9% |

Out of a total of 31,118 participants in the 'Human or AI' game, success rates varied significantly. A majority struggled to guess accurately: 9,952 identified two images correctly and 7,096 identified three.

A smaller fraction displayed higher accuracy: 2,717 participants correctly identified four images, and 607 correctly identified all five. Together, they make up 10.6% of the total, having identified at least four of the five images. This data highlights the varying ability of participants to distinguish between real and AI-generated images.
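
The percentages in the table can be recomputed from the participant counts. The following is a minimal Python sketch, not the study's own analysis code; the names `share` and `share_at_least` are illustrative. The denominator is passed in as a parameter because the row counts as printed sum to 31,188 while the stated total is 31,118, and results can shift by about a tenth of a percentage point depending on which is used and on whether rows are rounded before or after they are added.

```python
# Participant counts by number of images identified correctly (0-5),
# copied from the table above.
COUNTS = {0: 2919, 1: 7897, 2: 9952, 3: 7096, 4: 2717, 5: 607}


def share(count, total):
    """Percentage of `total` represented by `count`."""
    return 100.0 * count / total


def share_at_least(counts, k, total):
    """Percentage of participants with k or more correct answers."""
    qualifying = sum(n for score, n in counts.items() if score >= k)
    return share(qualifying, total)


if __name__ == "__main__":
    # Assumption: use the sum of the printed rows as the denominator.
    total = sum(COUNTS.values())
    for score, n in COUNTS.items():
        print(f"{score} out of 5: {share(n, total):.1f}%")
    print(f"4 or 5 correct: {share_at_least(COUNTS, 4, total):.1f}%")
```

Swapping `total` for the stated participant count is a one-line change, which makes it easy to compare both denominators.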

Conclusion

In a world filled with deepfake images, the first two months of the "Human or AI" game have shown us how hard it can be to tell real from AI-generated images. The statistics are eye-opening: only 1.9% of the 31,118 participants could correctly identify all five images, while 10.6% were able to identify either four or five images correctly. Worldwide, 59.4% of attempts were wrong.

In the United States, where most people played, only 0.91% could identify all the images.

These figures from just two months of gameplay remind us how crucial it is to keep up with AI advancements. As this game updates its findings weekly, it will provide an ongoing snapshot of how well people around the world are adapting to the ever-blurring line between real and AI-generated content. This knowledge is vital for everyone, especially as technology continues to advance rapidly.

Play the Game Here

Ready to test your skills at distinguishing between AI-generated images and real human images? Play "Human or AI?" now and see how well you do! Just click the link below to start the game - no registration required.

Data Updated Weekly

Keep in mind, the data from "Human or AI?" is updated weekly. This means you get fresh insights on how participants worldwide are doing in the game. Check back regularly to see the latest trends in image recognition accuracy!

Our Methodology for the Experiment:

Objective:

The primary goal of our experiment was to explore the ability of everyday users to differentiate between AI-generated images and real human faces. This study is part of our broader effort to understand public perception and awareness of AI-generated visual content.

Selection of Images:

For this experiment, we carefully curated a set of 40 images: 20 authentic human faces sourced from stock photos and verified against their social profiles, and 20 AI-generated faces produced with a GAN image generation model.

Design and Structure:

The humanorai.io site was designed to be intuitive and user-friendly, ensuring a seamless experience for participants. It displayed a series of five images to each user, asking them to tag each image as either AI-generated or a real human face.
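
One way a round like this could be served and scored is sketched below in Python. This is an illustration only: the article does not say how the five images were chosen, so uniform random sampling without repeats is an assumption, and the file names, labels, and the functions `draw_round` and `score_round` are hypothetical.

```python
import random

# Hypothetical pool: 20 real and 20 AI-generated images, each paired with
# its true label. The file names are placeholders, not the study's files.
POOL = [(f"real_{i:02d}.png", "human") for i in range(20)] + [
    (f"ai_{i:02d}.png", "ai") for i in range(20)
]


def draw_round(pool, k=5, rng=random):
    """Pick k distinct images for one game (assumed: uniform, no repeats)."""
    return rng.sample(pool, k)


def score_round(images, guesses):
    """Count how many guesses match the true label of each image."""
    return sum(
        1 for (_, label), guess in zip(images, guesses) if guess == label
    )


if __name__ == "__main__":
    images = draw_round(POOL)
    guesses = ["ai"] * len(images)  # a player who tags everything as AI
    print(f"{score_round(images, guesses)} out of {len(images)} correct")
```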

How We Conducted This Experiment

User Engagement:

Over two months, we engaged with approximately 31,118 users, resulting in around 155,590 individual responses. Our approach was to reach a diverse audience to ensure a wide range of perceptions and opinions.

Data Collection:

Each response was carefully logged and stored for analysis. Our system was designed to prevent biases and ensure the authenticity of the data collected. We collect certain data like your IP address and a unique user ID to enhance your experience and provide meaningful game results. Note that we only store the results of your first game play; subsequent plays are allowed but not stored.
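
The first-play-only rule can be illustrated with a short Python sketch. It assumes results are keyed on the unique user ID alone; the class `FirstPlayStore` and its methods are hypothetical and do not describe the site's actual storage.

```python
class FirstPlayStore:
    """Keeps only the first recorded score per user ID (hypothetical design)."""

    def __init__(self):
        self._scores = {}

    def record(self, user_id, score):
        """Store the score if this user has none yet; return True if stored."""
        if user_id in self._scores:
            return False  # repeat plays are allowed but not saved
        self._scores[user_id] = score
        return True

    def scores(self):
        """Return a copy of the stored first-play scores."""
        return dict(self._scores)


if __name__ == "__main__":
    store = FirstPlayStore()
    store.record("user-a", 2)   # first play: stored
    store.record("user-a", 5)   # second play: ignored
    print(store.scores())
```

Keeping only the first attempt means a participant cannot improve their recorded score by replaying, so the stored results reflect first impressions.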

Analysis:

Post-experiment, our team conducted a thorough analysis of the data. This involved not only quantifying the results but also looking for patterns and insights into how different demographics interacted with AI-generated versus real images.

Ethical Considerations:

We took great care to adhere to ethical standards. This included ensuring the privacy of participants and using images that were either publicly available or sourced from platforms that permit such use for research purposes.